UV3-TeD

While most state-of-the-art methods generate textures in UV-space and therefore inherit all the drawbacks of UV-mapping (e.g. seams and distortions), we propose to represent textures as unstructured point clouds sampled on the surface of an object, and we devise a technique to render them without any UV-mapping.
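To make the point-cloud representation concrete, the sketch below samples points uniformly by area on a triangle mesh. Pairing each sampled point with an RGB colour gives a point-cloud texture. This is a minimal NumPy sketch under stated assumptions: the helper name `sample_surface_points` is hypothetical, and area-weighted uniform sampling is one common choice, not necessarily the sampling scheme UV3-TeD uses.

```python
import numpy as np


def sample_surface_points(vertices, faces, n_points, seed=0):
    """Sample points uniformly by area on a triangle mesh (hypothetical helper).

    Returns the (n_points, 3) positions and, for each point, the index of
    the face it was drawn from.
    """
    rng = np.random.default_rng(seed)
    tri = vertices[faces]  # (F, 3, 3): corners of every triangle
    # Triangle area = half the norm of the cross product of two edges.
    areas = 0.5 * np.linalg.norm(
        np.cross(tri[:, 1] - tri[:, 0], tri[:, 2] - tri[:, 0]), axis=1
    )
    # Choose faces with probability proportional to their area.
    face_idx = rng.choice(len(faces), size=n_points, p=areas / areas.sum())
    # Uniform barycentric coordinates via the square-root trick.
    s = np.sqrt(rng.random(n_points))
    r = rng.random(n_points)
    w = np.stack([1.0 - s, s * (1.0 - r), s * r], axis=1)  # (n_points, 3)
    points = np.einsum("nk,nkd->nd", w, tri[face_idx])
    return points, face_idx


# Usage: a unit square in the z = 0 plane made of two triangles.
vertices = np.array([[0, 0, 0], [1, 0, 0], [1, 1, 0], [0, 1, 0]], dtype=float)
faces = np.array([[0, 1, 2], [0, 2, 3]])
points, face_idx = sample_surface_points(vertices, faces, n_points=1000)
# A point-cloud texture is then just positions plus one RGB colour per point.
colours = np.full((len(points), 3), 0.5)
```

Because the texture lives on these samples rather than in an image, its resolution is set by the number and distribution of points on the surface instead of by how a UV chart stretches the image.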

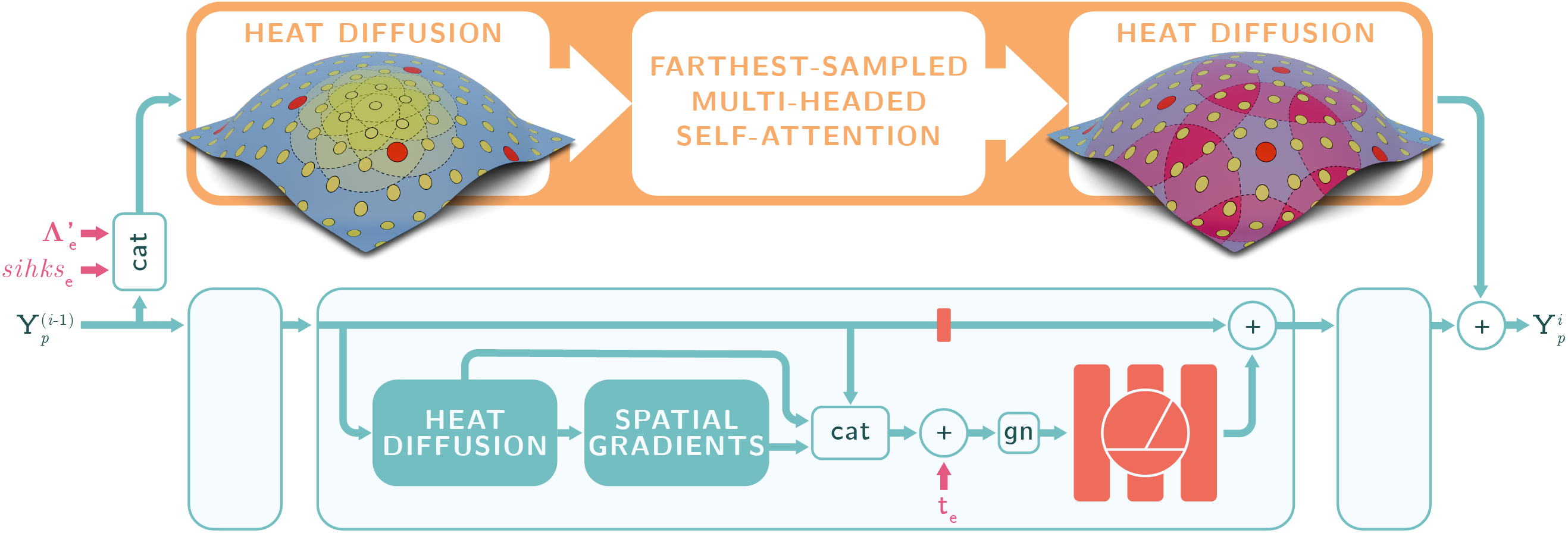

UV3-TeD generates point-cloud textures with a denoising diffusion probabilistic model that operates exclusively on the surface of the meshes, thanks to heat-diffusion-based operators specifically designed for the surface of the input shapes.
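A heat-diffusion operator can be illustrated with the spectral formulation used in DiffusionNet-style blocks: features are projected onto the eigenbasis of a Laplacian, each coefficient is damped by exp(-lambda * t) with a per-channel diffusion time t, and the result is projected back. This is a minimal sketch under simplifying assumptions: a dense k-NN graph Laplacian with an identity mass matrix stands in for a proper surface Laplacian, and the helper names `knn_graph_laplacian` and `spectral_heat_diffusion` are hypothetical.

```python
import numpy as np


def knn_graph_laplacian(points, k=8):
    """Dense graph Laplacian L = D - W of a symmetrised k-NN graph with
    Gaussian edge weights: a simple stand-in for a surface Laplacian."""
    n = len(points)
    d2 = ((points[:, None, :] - points[None, :, :]) ** 2).sum(-1)  # (n, n) squared distances
    nn = np.argsort(d2, axis=1)[:, 1 : k + 1]  # k nearest neighbours, skipping self
    rows = np.repeat(np.arange(n), k)
    cols = nn.ravel()
    sigma2 = d2[rows, cols].mean()
    w = np.zeros((n, n))
    w[rows, cols] = np.exp(-d2[rows, cols] / sigma2)
    w = np.maximum(w, w.T)  # make the graph undirected
    return np.diag(w.sum(axis=1)) - w


def spectral_heat_diffusion(features, evals, evecs, times):
    """Diffuse channel c for time times[c]: Phi diag(exp(-lambda * t_c)) Phi^T x_c.

    Assumes an identity mass matrix, so the eigenvectors are orthonormal.
    """
    coeffs = evecs.T @ features              # (K, C) spectral coefficients
    decay = np.exp(-np.outer(evals, times))  # (K, C) per-channel damping
    return evecs @ (decay * coeffs)


rng = np.random.default_rng(0)
pts = rng.random((200, 3))                   # points sampled on some surface
evals, evecs = np.linalg.eigh(knn_graph_laplacian(pts))
colours = rng.random((200, 3))               # e.g. noisy RGB values per point
times = np.array([0.0, 0.5, 5.0])            # one diffusion time per channel
smoothed = spectral_heat_diffusion(colours, evals, evecs, times)
```

With t = 0 a channel is returned unchanged, while larger t smooths it towards its mean, so a learnable diffusion time lets a network choose how far information travels across the surface.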

The Attention-enhanced Heat Diffusion operators at the core of UV3-TeD each consist of three consecutive Diffusion blocks (bottom), inspired by Sharp et al. (2020) and conditioned on a denoising time embedding, together with a diffused farthest-sampled attention layer (top). The proposed attention layer, conditioned on local and global shape embeddings, first spreads information to all the points on the surface with a heat diffusion, then computes multi-headed self-attention on the features of the farthest samples (red points), and finally spreads the results back to all the points with another heat diffusion.

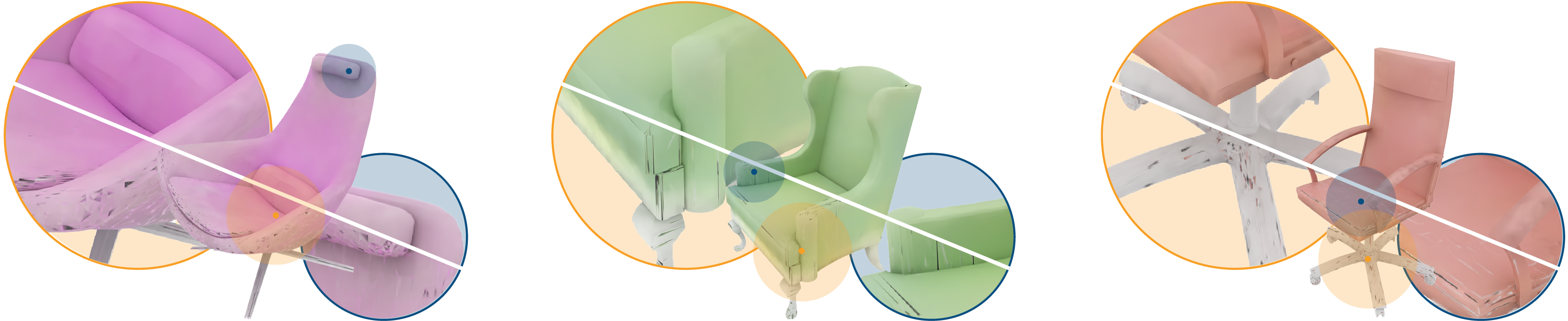
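The diffused farthest-sampled attention described above can be sketched as four steps: diffuse the features, gather them at farthest-point samples, apply multi-head self-attention, and scatter the result back before diffusing again. This NumPy sketch rests on several assumptions: a row-normalised Euclidean Gaussian kernel replaces the geodesic heat diffusion, the attention weights are random rather than learned, the local and global shape-embedding conditioning is omitted, and non-sampled points are zero-filled before the second diffusion. All function names are hypothetical.

```python
import numpy as np


def euclidean_heat_kernel(points, t):
    """Row-normalised Gaussian kernel exp(-d^2 / 4t): a crude Euclidean
    stand-in for a geodesic heat-diffusion operator on the surface."""
    d2 = ((points[:, None, :] - points[None, :, :]) ** 2).sum(-1)
    k = np.exp(-d2 / (4.0 * t))
    return k / k.sum(axis=1, keepdims=True)


def farthest_point_sampling(points, m, start=0):
    """Greedy FPS: repeatedly pick the point farthest from those already chosen."""
    chosen = [start]
    dist = np.linalg.norm(points - points[start], axis=1)
    for _ in range(m - 1):
        nxt = int(np.argmax(dist))
        chosen.append(nxt)
        dist = np.minimum(dist, np.linalg.norm(points - points[nxt], axis=1))
    return np.array(chosen)


def multi_head_self_attention(x, wq, wk, wv, wo, n_heads):
    """Scaled dot-product multi-head self-attention on x of shape (m, C)."""
    m, c = x.shape
    dh = c // n_heads

    def split(h):  # (m, C) -> (heads, m, dh)
        return h.reshape(m, n_heads, dh).transpose(1, 0, 2)

    q, k, v = split(x @ wq), split(x @ wk), split(x @ wv)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(dh)  # (heads, m, m)
    scores -= scores.max(axis=-1, keepdims=True)     # numerical stability
    attn = np.exp(scores)
    attn /= attn.sum(axis=-1, keepdims=True)         # softmax over keys
    out = (attn @ v).transpose(1, 0, 2).reshape(m, c)  # merge heads
    return out @ wo


def diffused_fps_attention(points, feats, heat, n_samples, weights, n_heads=4):
    """Diffuse -> attend on farthest samples -> scatter back -> diffuse again."""
    spread = heat @ feats                              # 1) spread information over the surface
    idx = farthest_point_sampling(points, n_samples)   # 2) pick the farthest samples
    attended = multi_head_self_attention(spread[idx], *weights, n_heads)
    sparse = np.zeros_like(feats)
    sparse[idx] = attended                             # 3) scatter (zero-fill is an assumption)
    return heat @ sparse                               # 4) spread results back to all points


rng = np.random.default_rng(0)
pts = rng.random((200, 3))
feats = rng.standard_normal((200, 8))
heat = euclidean_heat_kernel(pts, t=0.01)
weights = [rng.standard_normal((8, 8)) / np.sqrt(8) for _ in range(4)]  # random W_q, W_k, W_v, W_o
out = diffused_fps_attention(pts, feats, heat, n_samples=32, weights=weights)
```

Attending only over the farthest samples keeps the quadratic attention cost tied to the number of samples rather than to the full point count, while the two diffusions connect those samples to every point on the surface.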

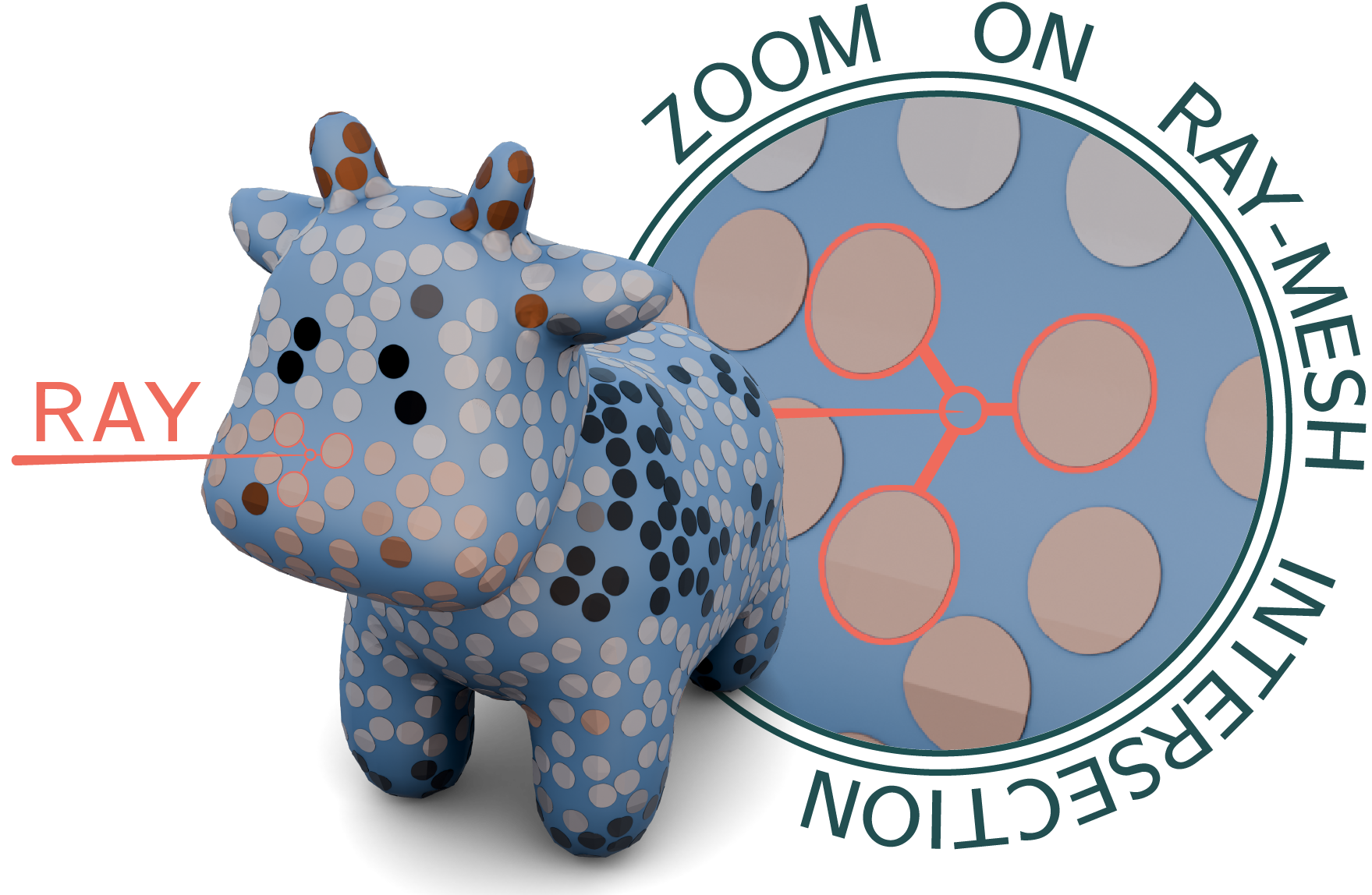

The point-cloud textures generated by UV3-TeD can be rendered with a physically based renderer by interpolating the colours of the three texture points nearest to each ray-mesh intersection. Rendering point-cloud textures directly (top-right halves) produces significantly better results than projecting them onto automatically unwrapped UV-textures (bottom-left halves).
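The colour lookup at a ray-mesh intersection can be sketched as follows: find the three texture points nearest to the hit and blend their colours. The interpolation weights are not specified here, so inverse-distance weighting is an assumption, as is the hypothetical helper name `interpolate_colour`. A real renderer would also use a spatial index (e.g. `scipy.spatial.cKDTree`) instead of the brute-force distances shown.

```python
import numpy as np


def interpolate_colour(hit_point, tex_points, tex_colours, k=3, eps=1e-8):
    """Blend the colours of the k texture points nearest to a ray-mesh hit.

    Inverse-distance weights are an assumption; eps avoids division by zero
    when the hit coincides with a texture point.
    """
    d = np.linalg.norm(tex_points - hit_point, axis=1)  # brute-force distances
    nearest = np.argsort(d)[:k]                          # indices of the k closest points
    w = 1.0 / (d[nearest] + eps)
    w /= w.sum()                                         # normalise to a convex combination
    return w @ tex_colours[nearest]


tex_points = np.array([[0, 0, 0], [1, 0, 0], [0, 1, 0], [5, 5, 5]], dtype=float)
tex_colours = np.array([[1, 0, 0], [0, 1, 0], [0, 0, 1], [1, 1, 1]], dtype=float)
# A hit equidistant from the first three points blends them equally,
# while the far-away white point is not among the three nearest.
colour = interpolate_colour(np.array([0.5, 0.5, 0.0]), tex_points, tex_colours)
```

Since the lookup only needs the intersection point, it works on any mesh without a UV parameterisation, which is what lets the renderer bypass UV-mapping entirely.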